Meta SAM 3 targets a long-standing problem in computer vision: linking language to specific visual elements in images and videos. Traditional segmentation models relied on fixed label sets, whereas Meta SAM 3 accepts user-defined prompts and segments whatever they describe.

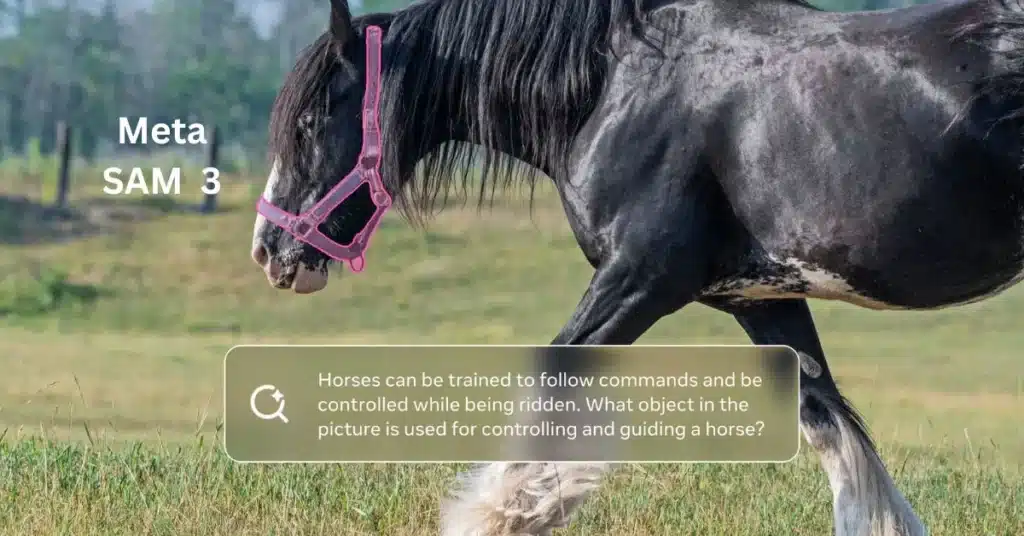

Unlike past models that could only identify predefined objects such as “person” or “car,” Meta SAM 3 introduces promptable concept segmentation. The model finds and segments every instance of a concept defined through a text or exemplar prompt, which extends it to subtle or rare concepts that fixed-label models could not handle.

To evaluate promptable concept segmentation, Meta developed the Segment Anything with Concepts (SA-Co) benchmark. This new dataset tests a model’s ability to recognize a large vocabulary of visual concepts in images and videos. By releasing SA-Co publicly, Meta supports community research, reproducibility, and the development of more inclusive AI segmentation systems.

Meta SAM 3 supports several prompt modalities. Users can supply concept prompts, such as short noun phrases or image exemplars, as well as the visual prompts introduced in SAM 1 and SAM 2: masks, boxes, and points. Combining these improves segmentation, especially for concepts that are difficult to describe using text alone.
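The two prompt families described above can be modeled as plain data containers. The sketch below is illustrative only: `ConceptPrompt`, `VisualPrompt`, `check_box`, and `describe` are hypothetical names and not part of any SAM 3 API, and representing an image exemplar as a bounding box is an assumption made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple, Union

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float, int]         # (x, y, label); 1 = foreground, 0 = background


def check_box(box: Box) -> None:
    """Reject boxes with zero or negative width or height."""
    x0, y0, x1, y1 = box
    if x1 <= x0 or y1 <= y0:
        raise ValueError(f"degenerate box: {box}")


@dataclass
class ConceptPrompt:
    """Hypothetical container: asks for every instance of a concept."""
    noun_phrase: Optional[str] = None
    exemplar_boxes: List[Box] = field(default_factory=list)  # assumed exemplar format

    def validate(self) -> None:
        if not self.noun_phrase and not self.exemplar_boxes:
            raise ValueError("a concept prompt needs a noun phrase or an exemplar")
        for box in self.exemplar_boxes:
            check_box(box)


@dataclass
class VisualPrompt:
    """Hypothetical container: picks out one object, SAM 1 / SAM 2 style."""
    points: List[Point] = field(default_factory=list)
    box: Optional[Box] = None
    mask: Optional[List[List[bool]]] = None  # coarse binary mask, row-major

    def validate(self) -> None:
        if not self.points and self.box is None and self.mask is None:
            raise ValueError("a visual prompt needs points, a box, or a mask")
        if self.box is not None:
            check_box(self.box)


def describe(prompt: Union[ConceptPrompt, VisualPrompt]) -> str:
    """Validate a prompt and summarize what it asks for."""
    prompt.validate()
    parts = []
    if isinstance(prompt, ConceptPrompt):
        if prompt.noun_phrase:
            parts.append(f"text={prompt.noun_phrase!r}")
        if prompt.exemplar_boxes:
            parts.append(f"exemplars={len(prompt.exemplar_boxes)}")
        return "concept prompt (all instances): " + ", ".join(parts)
    if prompt.points:
        parts.append(f"points={len(prompt.points)}")
    if prompt.box is not None:
        parts.append("box")
    if prompt.mask is not None:
        parts.append("mask")
    return "visual prompt (single object): " + ", ".join(parts)
```

Keeping the two families in separate types mirrors the distinction in the text: a concept prompt requests all matching instances, while a visual prompt singles out one object. A text phrase and an exemplar can be combined in one `ConceptPrompt` for concepts that words alone describe poorly.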

Meta reports performance gains of up to twice those of previous models. Beyond refining object segmentation, Meta SAM 3 can serve as a perception layer for multimodal large language models, which makes it a foundation for AI applications across media, research, and interactive tools.

Near Real-Time Performance and Extensive Benchmarking of Meta SAM 3

Meta SAM 3 delivers near real-time performance, processing an image with over 100 detected objects in approximately 30 milliseconds on high-performance GPUs. For video, it adds a memory-based tracker that maintains object continuity across frames and resolves temporal ambiguities, which boosts accuracy in multi-object tracking scenarios.
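To make the idea of tracking with memory concrete, here is a deliberately simple stand-in. It is not SAM 3's tracker: it uses greedy IoU matching on bounding boxes rather than learned memory features, and `ToyMemoryTracker`, `iou_threshold`, and `max_missed` are hypothetical names and parameters chosen for this sketch. It only illustrates how remembering each object's last known position lets identities survive reordering and brief disappearances.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class ToyMemoryTracker:
    """Toy tracker: remembers the last box of each object (illustrative only)."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5) -> None:
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.memory: Dict[int, Box] = {}  # track id -> last seen box
        self.missed: Dict[int, int] = {}  # track id -> frames since last seen
        self.next_id = 0

    def update(self, detections: List[Box]) -> List[int]:
        """Return one track id per detection, in input order."""
        # Score every (remembered track, detection) pair, best overlap first.
        pairs = sorted(
            ((iou(box, det), tid, i)
             for tid, box in self.memory.items()
             for i, det in enumerate(detections)),
            reverse=True,
        )
        assigned: Dict[int, int] = {}  # detection index -> track id
        used_tracks = set()
        for score, tid, i in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little to match
            if tid in used_tracks or i in assigned:
                continue
            assigned[i] = tid
            used_tracks.add(tid)

        # Detections that matched nothing in memory start new tracks.
        for i in range(len(detections)):
            if i not in assigned:
                assigned[i] = self.next_id
                self.next_id += 1

        # Refresh memory for tracks seen in this frame.
        for i, tid in assigned.items():
            self.memory[tid] = detections[i]
            self.missed[tid] = 0

        # Age unseen tracks and forget them after max_missed frames.
        seen = set(assigned.values())
        for tid in list(self.memory):
            if tid not in seen:
                self.missed[tid] += 1
                if self.missed[tid] > self.max_missed:
                    del self.memory[tid]
                    del self.missed[tid]

        return [assigned[i] for i in range(len(detections))]
```

Calling `update` once per frame returns stable ids: an object that goes undetected for a few frames keeps its id when it reappears near its remembered position, as long as the gap does not exceed `max_missed`.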

The publicly released SA-Co benchmark covers over 214,000 unique concepts across 126,000 images and videos. Meta describes it as far larger and more diverse than prior benchmarks, and intends it to support reproducible concept segmentation research and to push open-vocabulary models toward real-world visual complexity.

Meet SAM 3, a unified model that enables detection, segmentation, and tracking of objects across images and videos. SAM 3 introduces some of our most highly requested features like text and exemplar prompts to segment all objects of a target category. Learnings from SAM 3 will… pic.twitter.com/mB4OL4xgXR

— AI at Meta (@AIatMeta) November 19, 2025