China’s DeepSeek Disrupts Frontier AI Formula

China’s DeepSeek has released its V3.2 AI model, whose reasoning performance rivals that of OpenAI’s GPT-5. Whereas U.S. tech giants rely on vast computing budgets, DeepSeek reports reaching comparable results with fewer total training FLOPs. This suggests that efficiency, not just scale, can drive frontier AI development.

Transforming Enterprise AI Adoption Strategies

DeepSeek V3.2 could reshape how businesses adopt advanced AI at manageable cost. Because the model is released as open source, organizations can run it on infrastructure they control instead of relying solely on a vendor’s hosted service. This fits the industry’s growing emphasis on cost-efficient AI deployment and makes sophisticated reasoning tools more accessible.

Gold-Medal Achievements Highlight Innovation

The Hangzhou-based company unveiled two models: DeepSeek V3.2 and DeepSeek-V3.2-Speciale. The Speciale variant achieved gold-medal-level scores on problems from the 2025 International Mathematical Olympiad (IMO) and the 2025 International Olympiad in Informatics (IOI), feats previously reported only for unreleased internal models from leading U.S. labs. These results underscore DeepSeek’s rise as a world-class AI contender.

Navigating Semiconductor Restrictions

DeepSeek’s progress is especially notable given U.S. export restrictions that limit its access to advanced chips. Despite these constraints, the company matched top reasoning benchmarks using the hardware available to it, suggesting that careful engineering can partly offset geopolitical trade pressures.

Innovations in Sparse Attention Reduce Complexity

Much of DeepSeek’s success stems from its engineering, notably the DeepSeek Sparse Attention (DSA) mechanism. In standard dense attention, every token attends to every preceding token, so compute grows quadratically with sequence length. DSA instead uses a lightweight indexer to select only the most relevant tokens for each query and runs full attention over that subset. This sharply lowers the cost of processing long contexts while maintaining high accuracy, reducing hardware demands.
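To make the selection step concrete, here is a minimal single-query sketch of top-k sparse attention in Python. It is not DeepSeek’s implementation: a plain dot product over separate “index” vectors stands in for DeepSeek’s learned indexer, and the function names and the `top_k` parameter are hypothetical. The point is the shape of the computation: a cheap score for every earlier token, then full attention over only the highest-scoring few.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_attention(
    query: Vector,
    keys: List[Vector],
    values: List[Vector],
    index_query: Vector,
    index_keys: List[Vector],
    top_k: int,
) -> Tuple[Vector, List[int]]:
    """Attend from one query to at most top_k of the (preceding) tokens.

    Hypothetical sketch: index_query/index_keys are stand-ins for the
    indexer's own low-cost representations; keys/values are the usual
    attention inputs, assumed to cover only earlier tokens.
    """
    n = len(keys)
    # Step 1: the stand-in indexer scores every candidate token.
    index_scores = [dot(index_query, k) for k in index_keys]
    # Step 2: keep only the top_k highest-scoring positions
    # (ties keep their original order because Python's sort is stable).
    k = min(top_k, n)
    selected = sorted(range(n), key=lambda i: index_scores[i], reverse=True)[:k]
    # Step 3: ordinary scaled dot-product attention over the selected tokens.
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) * scale for i in selected])
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for d in range(len(out)):
            out[d] += w * values[i][d]
    return out, selected
```

When `top_k` is at least the number of tokens, the sketch reduces to ordinary dense attention, since the weighted sum does not depend on the order of the selected tokens. The savings appear when `top_k` is much smaller than the context length, because the softmax-weighted sum then touches only `top_k` tokens per query.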

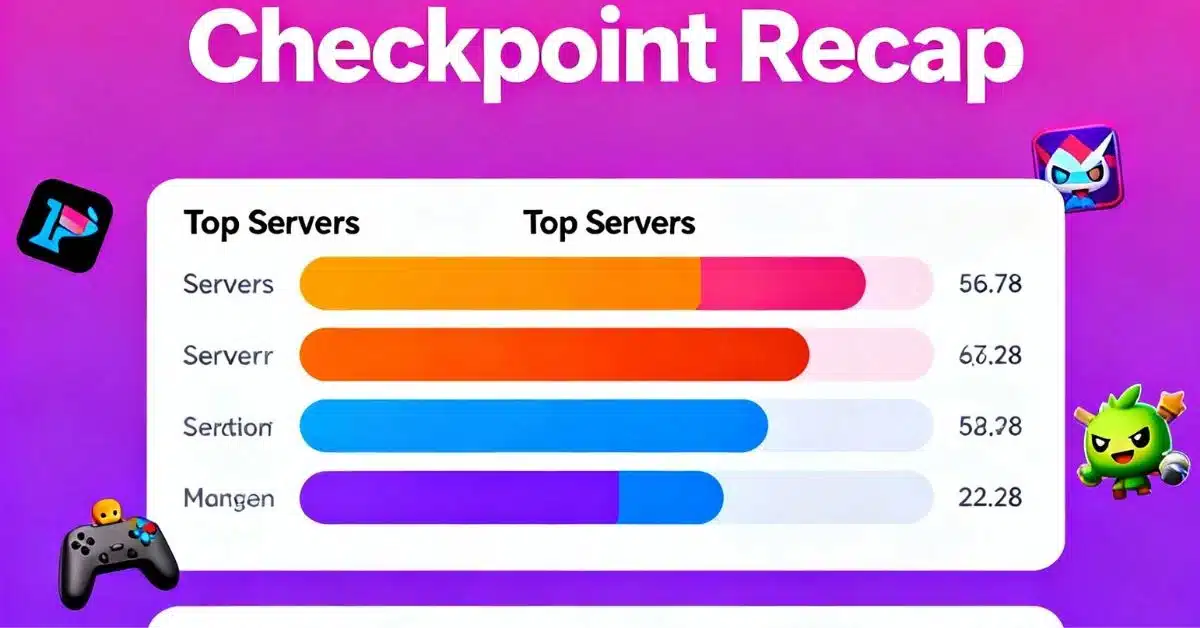

Delivering Record Benchmark Results

The standard DeepSeek V3.2 scored 93.1% on the AIME 2025 math benchmark and posted a strong Codeforces rating, putting its reasoning on par with GPT-5. The Speciale variant scored even higher, with near-perfect results in competition math, cementing DeepSeek’s place among the most capable models in technical evaluations.

Reinforcement Learning Over Raw Power

A central part of DeepSeek’s approach is heavy investment in reinforcement learning (RL). The company allocated a post-training compute budget exceeding 10% of its pre-training cost, using RL to unlock advanced problem-solving without brute-force scaling. This emphasis on efficient post-training could set a precedent for other AI developers.

Advancing Token Efficiency and Tool Use

DeepSeek V3.2 introduces a new context-management scheme for tasks that call external tools. Rather than discarding its reasoning after every message, the model retains relevant reasoning across tool calls, so it does not have to re-derive its plan at each step. This saves tokens and improves multi-turn performance. The model was also trained on nearly a trillion tokens, with the aim of improving its performance in real-world software environments.
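The retention policy can be sketched as a small conversation buffer. The sketch below assumes that reasoning traces are kept while only assistant tool calls and tool results are appended, and are dropped once a new user message starts a fresh turn; the `Message` and `Conversation` types are hypothetical illustrations, not part of any DeepSeek API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    role: str  # "user", "assistant", or "tool"
    content: str
    reasoning: Optional[str] = None  # assistant's reasoning trace, if any


@dataclass
class Conversation:
    messages: List[Message] = field(default_factory=list)

    def add(self, msg: Message) -> None:
        # Assumed policy: a new user message begins a new task, so earlier
        # reasoning traces are dropped. Visible answers and tool results stay.
        if msg.role == "user":
            for m in self.messages:
                m.reasoning = None
        # Assistant and tool messages leave earlier reasoning in place, so the
        # model can continue its plan across several tool calls.
        self.messages.append(msg)

    def context(self) -> List[str]:
        """Render the messages that would be sent back to the model."""
        lines = []
        for m in self.messages:
            if m.reasoning:
                lines.append(f"{m.role} [reasoning]: {m.reasoning}")
            lines.append(f"{m.role}: {m.content}")
        return lines


conv = Conversation()
conv.add(Message("user", "Fix the failing test"))
conv.add(Message("assistant", "call run_tests()", reasoning="Run the tests first"))
conv.add(Message("tool", "1 failure in test_parse"))
# The reasoning survives the tool result...
print(conv.context())
conv.add(Message("user", "Now update the docs"))
# ...but is dropped once the user starts a new turn.
print(conv.context())
```

Dropping reasoning only at user-turn boundaries keeps answers and tool outputs in context, while preventing stale reasoning from earlier tasks from consuming tokens indefinitely.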

Practical Utility in Coding and Problem-Solving

The model also performs well in practical settings, scoring 46.4% on Terminal Bench 2.0 and posting strong results on software engineering benchmarks, including SWE-bench Verified and SWE-bench Multilingual. On agentic tasks, V3.2 outperforms earlier open-source models in tool use and multi-step reasoning, making it a more credible option for enterprise software development.

Open Access and Community Impact

Enterprises can download DeepSeek V3.2 from its Hugging Face repository and deploy it themselves, gaining transparency and adaptability without vendor lock-in. The Speciale variant is offered only through DeepSeek’s API because of its higher resource needs, so organizations can choose between self-hosting the standard model and calling the more demanding variant as a service. Developers have praised DeepSeek’s documentation, and many see the release as a turning point in making high-end AI attainable.